Build your first embedded data product now. Talk to our product experts for a guided demo or get your hands dirty with a free 10-day trial.

It’s hard to scroll through your social media feeds without seeing a post about AI or ChatGPT. Writing sales email templates, drafting blog posts, debugging code: the list of use cases for generative AI tools seems endless. McKinsey estimates that generative AI could add $2.6 trillion to $4.4 trillion to the global economy annually across 63 use cases.

How about using AI for data analysis?

In this article, we’ll explore why AI is well suited to speeding up data analysis, how to automate each step of the analysis process, and which tools to use. Let’s jump in.

As data volumes grow, data exploration becomes harder, slower, and more dependent on specialist skills. AI data analysis refers not only to using artificial intelligence to process large datasets, but also to using AI to change how people interact with data and who can analyze it.

On the technical side, AI data analysis uses techniques such as:

But AI data analysis goes further than modeling and prediction.

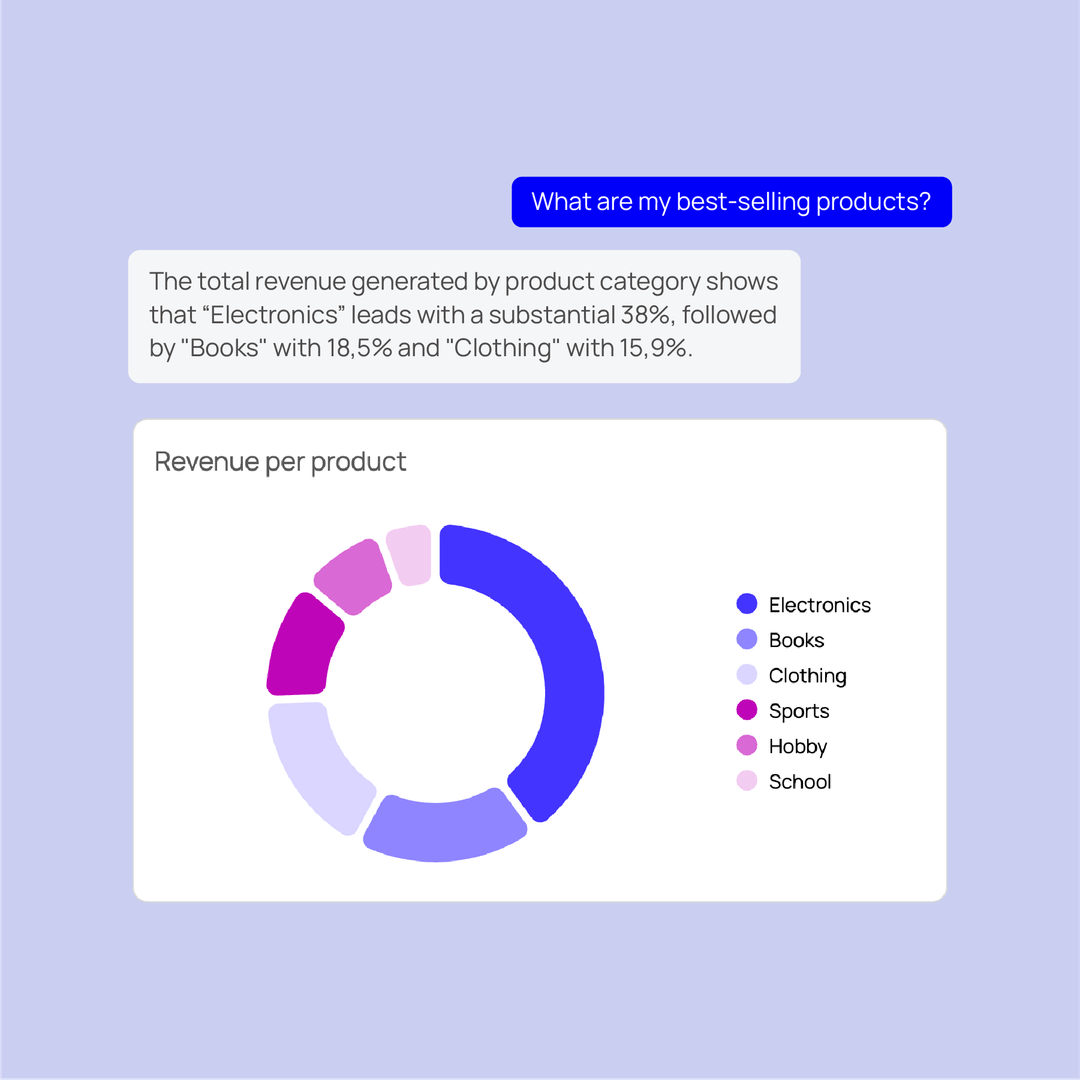

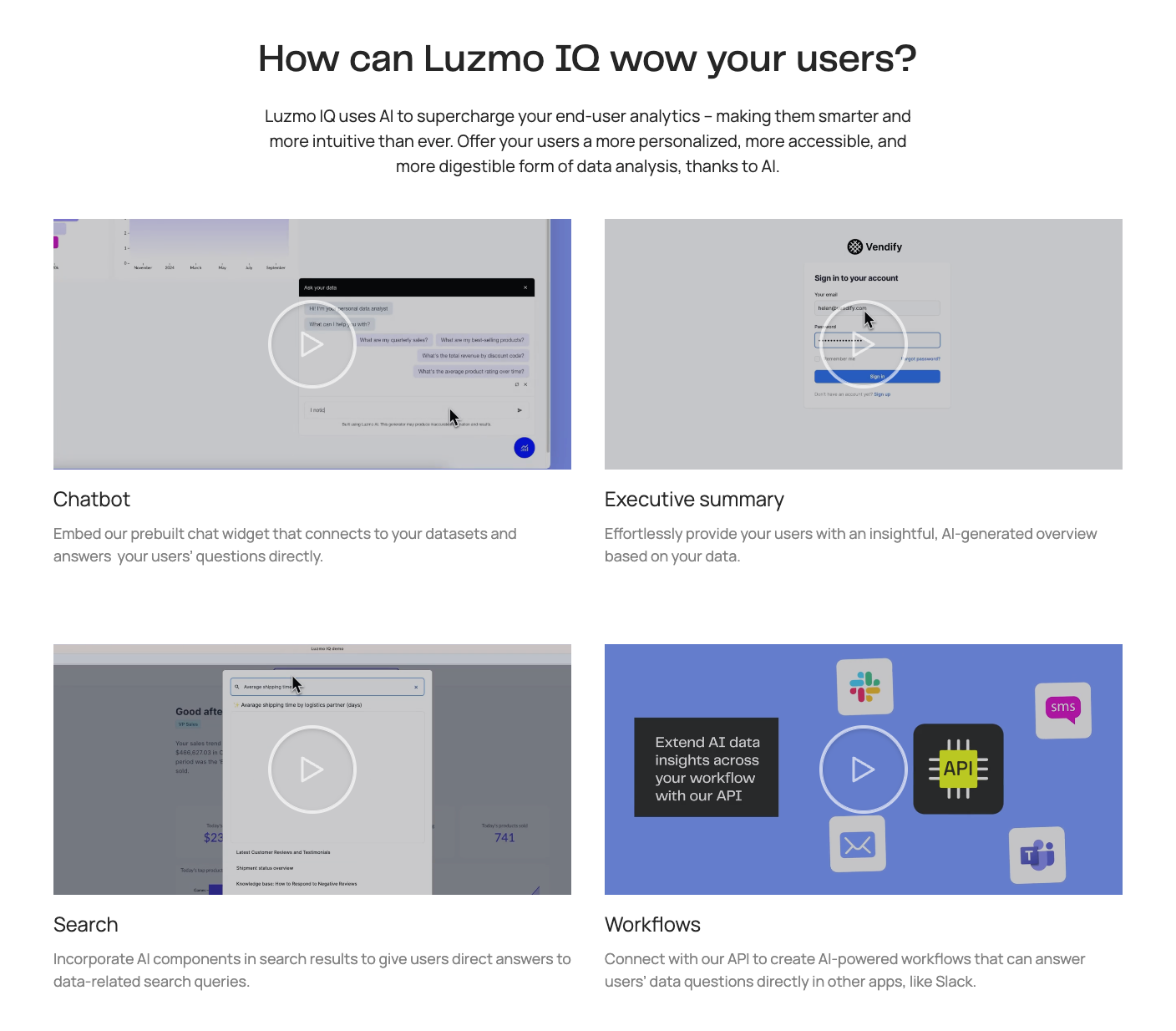

Modern AI-powered analytics also focuses on unlocking data for a wider audience. Instead of changing the data itself, AI changes the experience of analysis. Intelligent agents can explore datasets, surface insights, suggest next questions, generate charts, and explain results automatically. Platforms like Luzmo IQ provide this kind of AI-powered exploration, while Luzmo Studio lets teams turn those insights into interactive dashboards embedded directly into their products. That means users no longer need to know SQL, BI tools, or data science workflows to get value from data.

In practice, AI data analysis combines:

The result is analytics that shift from a specialist task to a shared capability — faster, more accessible, and closer to everyday decision-making.

Imagine you work in a warehouse that stores and distributes thousands of packages daily. To run your warehouse procurement better, you want to understand things like:

Traditionally, answering these questions requires manual queries, dashboards, or analyst support. With AI-driven analytics, much of this exploration can happen automatically: patterns surface faster, anomalies get flagged early, and follow-up questions can be answered on demand.

In this way, AI-driven insights pave the way for the warehouse of the future, where operations are optimized through real-time data analysis and predictive analytics.
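To make "anomalies get flagged early" a little more concrete, here is a minimal sketch of one common technique, z-score outlier detection, applied to daily package counts. The function name `flag_anomalies`, the threshold of 2.0, and the sample numbers are all assumptions made for illustration. This is not how any particular analytics product detects anomalies.

```python
from statistics import mean, stdev

def flag_anomalies(values, threshold=2.0):
    """Return the indices of values whose z-score exceeds the threshold.

    Hypothetical helper for illustration; the threshold is an assumption.
    """
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Made-up daily package counts for one warehouse; day 5 is an obvious spike.
daily_packages = [1020, 980, 1005, 995, 1010, 2400, 990, 1000]
print(flag_anomalies(daily_packages))  # [5]
```

A plain z-score is sensitive to the outliers it is trying to find, so on real data a more robust measure (for example one based on the median) may be a better fit.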

AI often feels intimidating when paired with data analysis. Some teams see AI as “magic” that just works, while they experience data analysis itself as complex, slow, or hard to trust. In reality, both perceptions can reinforce each other in unhelpful ways.

When AI is treated as a black box, and data foundations remain unclear, results feel unpredictable. When data analysis feels inaccessible, AI outputs feel harder to validate. Understanding the benefits of AI-powered analytics helps cut through both issues — not by promising miracles, but by showing where automation, speed, and accessibility genuinely add value.

Many teams already feel blocked by their current analytics setup. According to our research, 51% of users say their biggest frustration is that dashboards don’t let them interact meaningfully with data. Another 37% say insights aren’t actionable, while 36% complain dashboards are simply too slow.

It’s a clear signal: people want analytics that go beyond static charts, and AI, when used well, can shorten the time to insight.

AI-powered analytics relies on well-collected and well-prepared data. At the same time, its impact is felt where insights are generated and decisions are informed.

For that reason, we begin with analysis and insight, and then move to the underlying data processes that enable them.

In practice, this is where most teams start. They review existing insights, usage analytics, or AI-generated answers to understand what’s happening and where questions remain. AI-powered data analysis turns this audit step into something fast, interactive, and accessible.

When data is clean, organized, and flowing through a reliable pipeline, AI can help transform raw numbers into actionable insight. Modern analytics — especially when augmented with AI — makes it possible to analyze large and complex datasets to identify patterns, correlations, anomalies, and trends that would be difficult or time-consuming to detect manually.

This can include:

AI dramatically reduces the time between “data collected” and “insight delivered.” What traditionally required hours or days of analyst work — writing SQL queries, aggregating data, slicing dashboards — can now happen in seconds.

Just as importantly, AI brings accessibility. With natural-language querying and intelligent decision logic, non-technical users such as product managers, sales, operations, or marketing teams can explore data on their own. Analytics is no longer limited to specialists; it becomes usable across the organization (and even inside customer-facing products) without requiring deep data expertise.

Luzmo IQ is built to transform this analysis phase — from data to insight — in an accessible, user-friendly way:

Combined with AI-augmented analysis, tools like Luzmo IQ turn analytics from a specialist task into a core part of how decisions are made: fast, democratic, and scalable.

Go even further with AI-powered embedded analytics: Meet Luzmo’s Agent APIs

If dashboards are the “view to your data,” Luzmo’s Agent APIs give you the building blocks to design how intelligence works inside your product. They aren’t an alternative interface to Luzmo IQ — they let you move beyond any predefined analyst experience and build your own analytics workflows, UX, and interactions.

Instead of being constrained to static charts or fixed query flows, the Agent APIs expose modular AI capabilities that you can combine however your product needs. You decide when AI steps in, what it does, and how results appear in your UI.

With the Agent APIs, you can build workflows such as:

For SaaS product teams, this means analytics becomes a native product capability rather than an embedded tool. Intelligence can live directly inside existing screens, flows, and decisions — whether that’s a reporting view, an operational workflow, or a guided assistant.

This “agentic analytics” approach shifts analytics from something users visit to something that works alongside them. Teams can start small, then scale toward fully custom, AI-powered analytics experiences over time. Because the APIs are modular and API-first, you retain full control over UX, security, permissions, and integration, while giving users flexible ways to explore and act on their data.

Once analysis surfaces patterns, trends, or anomalies, visualization is what turns those findings into insight people can actually understand and act on. Raw tables or spreadsheets rarely communicate meaning on their own. Visual representations help translate complex data into something that’s easier to interpret, discuss, and use in decision-making.

Effective visualization matters because it:

A clear overview of what matters. Whether insights appear in a dashboard, a report, or directly inside a workflow, users need an immediate sense of what’s happening. Key metrics, trends, or signals should summarize business or operational health without requiring extra interpretation.

Ability to explore and follow questions. Good analytics supports curiosity. Users should be able to drill down, filter, compare, and ask follow-up questions wherever insights appear — without switching tools, exporting data, or opening a separate BI interface.

Flexibility across workflows and users. Analytics should adapt to how people work. Sometimes that means dashboards, but it can just as well mean a chart embedded in a product screen, a contextual insight inside an operational flow, or an explanation triggered by a question. The experience should remain intuitive and accessible, even for non-technical users such as managers, operators, or clients.

Speed and clarity at the moment of action. Analytics delivers the most value when it fits naturally into fast-moving workflows. Lightweight charts, visual summaries, or concise explanations help users understand what’s happening and decide what to do next — without slowing them down.

With Luzmo, analytics isn’t limited to standalone dashboards.

Our embedded analytics platform lets you surface charts, KPIs, and AI-driven insights directly inside your application — wherever users already work. That can be a dashboard, but it can also be a product screen, a workflow step, or a guided experience tied to a specific action.

Non-technical users can still build or customize visualizations when dashboards make sense, while product teams retain full control over where and how analytics appears. As data volume, use cases, or user needs grow, the analytics experience scales without heavy engineering overhead.

While descriptive analytics explains what’s happening now, predictive analytics focuses on what’s likely to happen next. This is where artificial intelligence adds a clear advantage over traditional reporting. By learning from historical patterns, AI can forecast future outcomes and surface risks or opportunities before they fully materialize.

Predictive analytics uses models trained on past behavior, trends, and contextual signals to anticipate future states. Instead of reacting to changes after they occur, teams can plan ahead and act earlier.
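The idea of learning from past behavior to anticipate future states can be sketched with the simplest possible model: a straight-line trend fitted by least squares and extended a few periods ahead. The names `fit_trend` and `forecast` and the monthly order figures are assumptions for illustration. Real forecasting models usually also account for seasonality, uncertainty, and contextual signals.

```python
def fit_trend(ys):
    """Least-squares fit of y = intercept + slope * t for t = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept

def forecast(ys, steps):
    """Extend the fitted trend `steps` periods beyond the last observation."""
    slope, intercept = fit_trend(ys)
    n = len(ys)
    return [intercept + slope * (n + k) for k in range(steps)]

# Made-up monthly order counts with a steady upward trend.
monthly_orders = [100, 110, 120, 130]
print(forecast(monthly_orders, 2))  # [140.0, 150.0]
```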

Common use cases include:

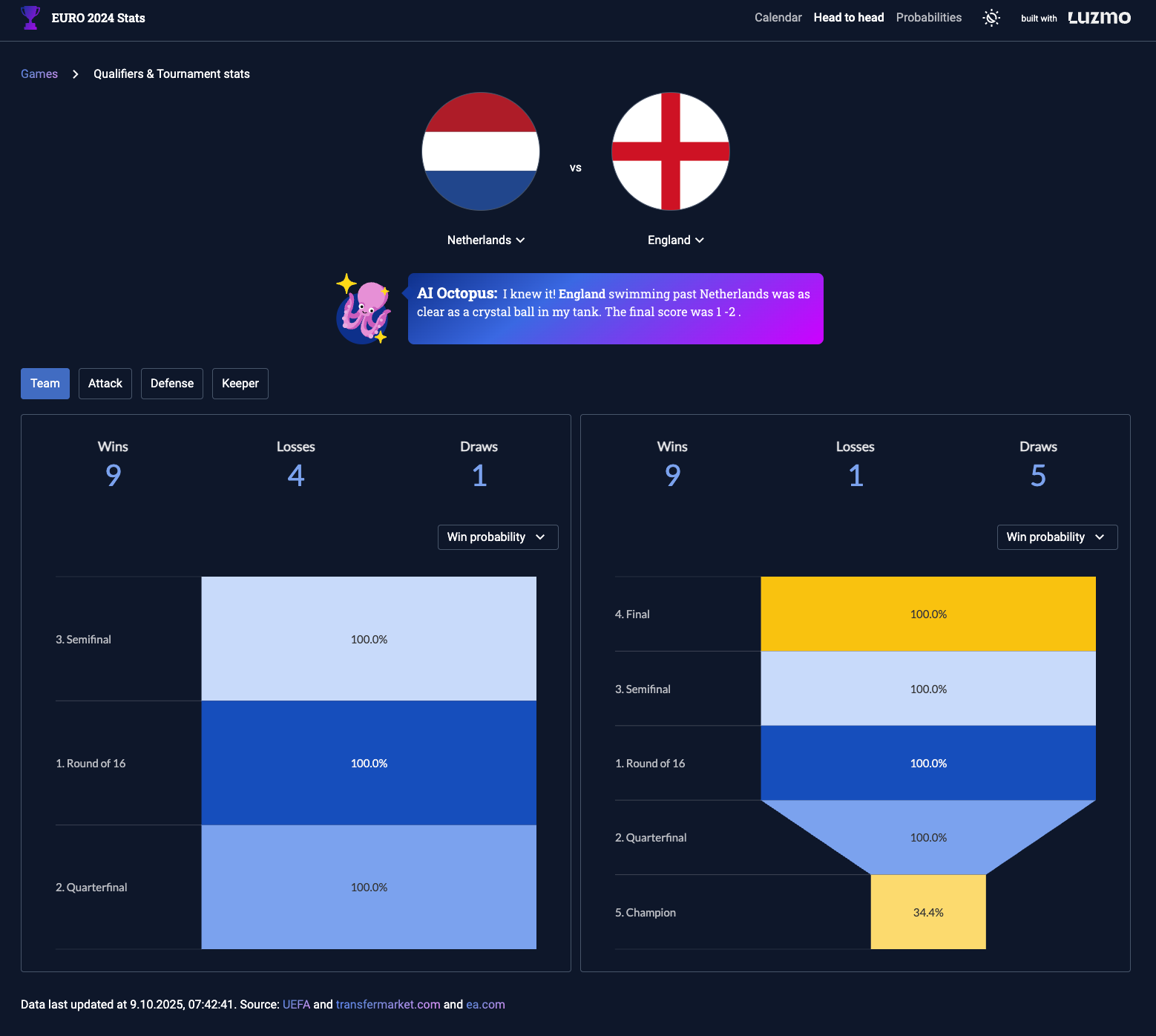

A good example of predictive analytics in action is this soccer app that forecasted match outcomes during EURO 2024. An LLM summarized predictions in natural language, while interactive visualizations allowed users to compare team metrics side by side — combining forecasting with explanation and exploration in one experience.

Predictive analytics works best when it’s tightly connected to visualization and decision-making. Forecasts need to be understandable, explorable, and grounded in real data so teams can trust the results and act on them with confidence.

As insights deepen and predictions become more ambitious, teams often discover what’s missing. Forecasts may lack confidence, segments may feel incomplete, or follow-up questions may remain unanswered. That’s typically the signal to revisit data collection.

Data collection is the foundation of any analytics or AI-driven workflow. Without the right data, meaningful insight simply isn’t possible. The goal is to capture inputs that accurately reflect the reality you want to understand or predict — whether that’s user behavior logs, transaction records, outreach metrics, analytics events, or external data sources.

Effective data collection requires intention. Data should be gathered consistently, labeled or tagged where needed, and stored in formats that remain easy to retrieve and analyze later. When data originates from multiple sources — such as internal databases, external APIs, logs, or third-party services — it’s important to plan how those sources will be integrated and unified.

Poorly aligned schemas, missing identifiers, or inconsistent formats can break analysis downstream and limit what AI can do. By designing collection pipelines with integration in mind, teams avoid unnecessary friction later in the analytics process.
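As a rough illustration of why missing identifiers matter, the sketch below joins two sources on a shared key and reports the rows that cannot be matched instead of silently dropping them. The field names (`customer_id`, `name`, `total`) and the helper `join_on_id` are hypothetical; a real pipeline would normally do this in a database or a dataframe library.

```python
def join_on_id(customers, orders, key="customer_id"):
    """Inner-join two lists of dicts on `key` and collect unmatched orders."""
    by_id = {c[key]: c for c in customers if c.get(key) is not None}
    joined, unmatched = [], []
    for order in orders:
        customer = by_id.get(order.get(key))
        if customer is None:
            unmatched.append(order)  # missing or unknown identifier
        else:
            joined.append({**customer, **order})
    return joined, unmatched

# Made-up records from two sources, e.g. a CRM export and an orders API.
customers = [
    {"customer_id": 1, "name": "Acme"},
    {"customer_id": 2, "name": "Globex"},
]
orders = [
    {"customer_id": 1, "total": 250},
    {"customer_id": 3, "total": 90},  # identifier not present in customers
    {"total": 15},                    # identifier missing entirely
]

joined, unmatched = join_on_id(customers, orders)
print(len(joined), "joined,", len(unmatched), "unmatched")
```

Tracking the unmatched rows explicitly makes integration problems visible at collection time rather than later, when a chart or forecast looks wrong.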

Strong data collection practices make everything that follows easier. A solid pipeline reduces manual work, minimizes integration issues, and supports scalable, repeatable analytics — allowing insights, visualizations, and predictions to improve over time rather than degrade as complexity grows.

Collecting data is only the first step. Before analytics or AI models can be trusted, that data needs to be cleaned and validated. Raw datasets often contain duplicates, missing values, inconsistent formats, typos, or corrupted records. Without cleaning, any analysis — and especially predictive modeling — rests on shaky ground.

Data cleaning focuses on turning raw inputs into reliable, analysis-ready datasets. This typically involves:

Clearing this “noise” improves the accuracy and reliability of insights. When data is clean, patterns are easier to detect, forecasts become more trustworthy, and decisions are less likely to be skewed by errors or inconsistencies.
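A minimal cleaning pass might look like the sketch below: it normalizes formats, drops rows with missing or unparseable required fields, and removes duplicates. The field names (`email`, `signup_date`), the accepted date formats, and the choice to drop rather than repair bad rows are all assumptions for illustration.

```python
from datetime import datetime

# Assumed input formats; extend this tuple for other sources.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")

def parse_date(raw):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def clean(records):
    """Normalize, validate, and deduplicate records keyed by email."""
    seen, cleaned = set(), []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        date = parse_date(rec.get("signup_date") or "")
        if not email or date is None:
            continue  # missing or corrupted required field
        if email in seen:
            continue  # duplicate after normalization
        seen.add(email)
        cleaned.append({"email": email, "signup_date": date})
    return cleaned

raw = [
    {"email": " Ann@Example.com ", "signup_date": "2024-02-03"},
    {"email": "ann@example.com", "signup_date": "03/02/2024"},   # duplicate
    {"email": "", "signup_date": "2024-01-01"},                  # missing value
    {"email": "bob@example.com", "signup_date": "not a date"},   # corrupted
    {"email": "cy@example.com", "signup_date": "15/01/2024"},
]
for row in clean(raw):
    print(row)
```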

It’s also worth noting that data preparation (including cleaning) often represents the largest share of analytics work. Many data projects spend more time preparing data than building models or visualizations. While AI can automate parts of this process, clean inputs remain essential: AI amplifies the quality of your data, both good and bad.

Well-maintained, clean datasets close the loop between collection and analysis. They make it easier to iterate, scale analytics efforts, and support more advanced use cases such as AI-driven forecasting and embedded analytics.

Insights only create value when they influence decisions. Data-driven decision-making means using analytics not just to understand what’s happening, but to guide what to do next — with clarity, confidence, and accountability.

When teams rely on real data instead of assumptions or gut feeling, decisions become easier to justify and easier to evaluate. A complete analytics stack — solid data collection, clean inputs, thoughtful analysis, clear visualization, and reliable forecasting — makes it possible to react faster and allocate resources more effectively.

Data-driven decision-making helps organizations:

To make sure analytics truly informs decisions, it helps to follow a simple checklist:

When analytics is embedded directly into workflows and products, this loop becomes faster and more natural. Insights appear where decisions are made, follow-up questions are easy to ask, and teams can continuously refine both their data and their actions.

That’s where AI-powered analytics delivers its real impact: not as a reporting layer, but as an everyday decision support system that evolves alongside the business.

AI-powered analytics can unlock tremendous value, but it also introduces real risks for data security and privacy. The more data your system ingests, the greater the responsibility to protect it.

With Luzmo IQ, you retain control over who sees what. The platform includes an access-control layer (ACL) that ensures only authorized datasets are queried, and that sensitive data is never exposed broadly.

Every IQ Agent request is routed through our ACL, offering strong user row-level filtering, and our Query Engine, for trusted aggregation and formulas.

In other words: the LLM cannot be compelled to release data it doesn't have access to, and is not relied upon for data accuracy. ~ Haroen
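The general pattern described in the quote, filtering rows by user before anything reaches the model and computing aggregates in ordinary code, can be sketched as follows. This is a generic illustration only, not Luzmo's implementation or API. The names `rows_for_user`, `total_revenue`, and `answer_for_user` and the `tenant` and `revenue` fields are hypothetical.

```python
def rows_for_user(rows, user_tenant):
    """Row-level filter: keep only the rows this user is allowed to see."""
    return [r for r in rows if r["tenant"] == user_tenant]

def total_revenue(rows):
    """Deterministic aggregation done in code, not by a language model."""
    return sum(r["revenue"] for r in rows)

def answer_for_user(rows, user_tenant):
    """Build the only payload a model would receive in this sketch:
    an aggregate computed over rows that were already filtered."""
    visible = rows_for_user(rows, user_tenant)
    return {"tenant": user_tenant, "total_revenue": total_revenue(visible)}

# Made-up multi-tenant data.
all_rows = [
    {"tenant": "acme", "revenue": 100},
    {"tenant": "acme", "revenue": 250},
    {"tenant": "globex", "revenue": 900},
]
print(answer_for_user(all_rows, "acme"))
# {'tenant': 'acme', 'total_revenue': 350}
```

Because the filter runs before the aggregate and the model only sees the result, a prompt cannot surface rows belonging to another tenant, and the numbers do not depend on the model's arithmetic.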

If you feed clean, compliant data into Luzmo and use its built-in security features (access control, aggregated query results), you can make the most of the power of AI-driven analytics while keeping privacy and data protection intact.

If you're building a software product, there’s no better time to bring embedded analytics into the mix. With Luzmo, you can quickly integrate interactive dashboards, real-time reports, and AI-driven insights directly inside your app, with no heavy BI infrastructure required.

Start giving your users instant access to data-driven insight, and make analytics a seamless part of their workflow.

Sign up for a free trial or book a demo to see Luzmo in action.

All your questions answered.

What exactly is AI data analysis, and how does it differ from traditional methods?

AI data analysis uses machine learning and intelligent algorithms to sift through large volumes of data to detect patterns, trends, and insights that would take humans far longer to find manually. Traditional data analysis relies on human analysts applying statistical methods and visual inspection. In contrast, AI automates many steps, such as cleaning data, detecting anomalies, classifying information, and even suggesting hypotheses. This speeds up the analytics lifecycle and reduces manual effort.

Can results from AI data analysis be trusted? How do you validate them?

AI systems are only as accurate as the data and training they receive. To validate AI analysis, use holdout or validation datasets that the model has never seen during training, as is standard practice in machine learning. Compare AI outputs to known benchmarks or expert analysis to see if results align, and monitor performance continuously, since data patterns can shift over time. Techniques like cross-validation and tracking performance on unseen data help confirm that the AI isn’t just “remembering” patterns but genuinely learning meaningful relationships.
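Holdout validation can be sketched in a few lines: set part of the data aside, fit only on the rest, and measure the error on the part the model never saw. The helper names, the 25% holdout fraction, the fixed seed, and the toy data are assumptions for illustration. In practice a library such as scikit-learn would usually handle the split and the metrics.

```python
import random

def split_holdout(points, holdout_fraction=0.25, seed=42):
    """Shuffle a copy of the data and set aside a holdout slice."""
    shuffled = list(points)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - holdout_fraction))
    return shuffled[:cut], shuffled[cut:]

def fit_line(points):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(points)
    x_mean = sum(x for x, _ in points) / n
    y_mean = sum(y for _, y in points) / n
    sxx = sum((x - x_mean) ** 2 for x, _ in points)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in points)
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean

def mean_absolute_error(model, points):
    """Average absolute gap between predictions and actual values."""
    slope, intercept = model
    return sum(abs(y - (slope * x + intercept)) for x, y in points) / len(points)

# Noise-free toy data following y = 2x + 1.
data = [(x, 2 * x + 1) for x in range(8)]
train, holdout = split_holdout(data)
model = fit_line(train)  # the holdout rows are never used for fitting
print("holdout MAE:", mean_absolute_error(model, holdout))
```

On this noise-free toy data the holdout error is essentially zero. On real data, a holdout error much larger than the training error is a sign that the model has memorized rather than generalized.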

What business problems does AI data analysis solve best?

AI excels in areas where data volume and complexity overwhelm manual analysis. Common use cases include predictive forecasting (e.g., sales or churn prediction), anomaly detection for fraud or operational issues, real-time trend identification across massive data streams, and automated reporting and pattern summarization that would be time-consuming to do manually. These capabilities allow organizations to make faster, data-driven decisions with less human overhead.

Do businesses need a data science team to use AI analysis?

Not necessarily. Many modern platforms embed AI capabilities directly into analytics interfaces, letting non-technical users ask natural-language questions and get insights without coding. That said, having analytics expertise helps with data governance, model interpretation, and making sure that AI outputs are applied responsibly, especially in regulated environments.
